| CV |

Email |

Google Scholar |

|

I am a first-year Ph.D. student at the Robotics Institute at Carnegie Mellon University, advised by Guanya Shi and Changliu Liu. Previously, I received my Bachelor's degree in computer science from Shanghai Jiao Tong University, advised by Weinan Zhang. I also spent time at Microsoft Research Asia. As a robotics researcher, my goal is to challenge conventional notions of what robots can achieve. My focus is on developing robots that combine intelligence, generalizability, agility, and safety. To do so, I am devoted to developing learning-based methods that scale with computation and data, and that incorporate structures and priors only where necessary to achieve more data-efficient training and ensure safety and robustness. Email: tairanh [AT] andrew.cmu.edu |

|

webpage |

pdf |

abstract |

bibtex |

arXiv |

video |

media (ieee spectrum)

We present Human to Humanoid (H2O), a reinforcement learning (RL) based framework that enables real-time whole-body teleoperation of a full-sized humanoid robot with only an RGB camera. To create a large-scale retargeted motion dataset of human movements for humanoid robots, we propose a scalable "sim-to-data" process to filter and pick feasible motions using a privileged motion imitator. Afterwards, we train a robust real-time humanoid motion imitator in simulation using these refined motions and transfer it to the real humanoid robot in a zero-shot manner. We successfully achieve teleoperation of dynamic whole-body motions in real-world scenarios, including walking, back jumping, kicking, turning, waving, pushing, boxing, etc. To the best of our knowledge, this is the first demonstration to achieve learning-based real-time whole-body humanoid teleoperation.

@inproceedings{he2024learning,

author = {He, Tairan and Luo, Zhengyi and Xiao, Wenli and Zhang, Chong and Kitani, Kris and Liu, Changliu and Shi, Guanya},

title = {Learning Human-to-Humanoid Real-Time Whole-Body Teleoperation},

booktitle = {arXiv},

year = {2024},

}

|

|

|

webpage |

pdf |

abstract |

bibtex |

arXiv |

code |

video |

media (ieee spectrum)

Legged robots navigating cluttered environments must be jointly agile for efficient task execution and safe to avoid collisions with obstacles or humans. Existing studies either develop conservative controllers (< 1.0 m/s) to ensure safety, or focus on agility without considering potentially fatal collisions. This paper introduces Agile But Safe (ABS), a learning-based control framework that enables agile and collision-free locomotion for quadrupedal robots. ABS involves an agile policy to execute agile motor skills amidst obstacles and a recovery policy to prevent failures, collaboratively achieving high-speed and collision-free navigation. The policy switch in ABS is governed by a learned control-theoretic reach-avoid value network, which also guides the recovery policy as an objective function, thereby safeguarding the robot in a closed loop. The training process involves the learning of the agile policy, the reach-avoid value network, the recovery policy, and an exteroception representation network, all in simulation. These trained modules can be directly deployed in the real world with onboard sensing and computation, leading to high-speed and collision-free navigation in confined indoor and outdoor spaces with both static and dynamic obstacles.

@inproceedings{he2024agile,

author = {He, Tairan and Zhang, Chong and Xiao, Wenli and He, Guanqi and Liu, Changliu and Shi, Guanya},

title = {Agile But Safe: Learning Collision-Free High-Speed Legged Locomotion},

booktitle = {arXiv},

year = {2024},

}

|

|

|

webpage |

pdf |

abstract |

bibtex |

arXiv |

code |

video

A critical goal of autonomy and artificial intelligence is enabling autonomous robots to rapidly adapt in dynamic and uncertain environments. Classic adaptive control and safe control provide stability and safety guarantees but are limited to specific system classes. In contrast, policy adaptation based on reinforcement learning (RL) offers versatility and generalizability but presents safety and robustness challenges. We propose SafeDPA, a novel RL and control framework that simultaneously tackles the problems of policy adaptation and safe reinforcement learning. SafeDPA jointly learns adaptive policy and dynamics models in simulation, predicts environment configurations, and fine-tunes dynamics models with few-shot real-world data. A safety filter based on the Control Barrier Function (CBF) on top of the RL policy is introduced to ensure safety during real-world deployment. We provide theoretical safety guarantees of SafeDPA and show the robustness of SafeDPA against learning errors and extra perturbations. Comprehensive experiments on (1) classic control problems (Inverted Pendulum), (2) simulation benchmarks (Safety Gym), and (3) a real-world agile robotics platform (RC Car) demonstrate great superiority of SafeDPA in both safety and task performance, over state-of-the-art baselines. Particularly, SafeDPA demonstrates notable generalizability, achieving a 300% increase in safety rate compared to the baselines, under unseen disturbances in real-world experiments.

@article{xiao2023safe,

title={Safe Deep Policy Adaptation},

author={Xiao, Wenli and He, Tairan and Dolan, John and Shi, Guanya},

journal={arXiv preprint arXiv:2310.08602},

year={2023}

}

|

|

|

pdf |

abstract |

bibtex |

arXiv

An attached arm can significantly increase the applicability of legged robots to several mobile manipulation tasks that are not possible for their wheeled or tracked counterparts. The standard control pipeline for such legged manipulators is to decouple the controller into that of manipulation and locomotion. However, this is ineffective and requires immense engineering to support coordination between the arm and legs, and errors can propagate across modules, causing non-smooth, unnatural motions. It is also biologically implausible, since there is evidence for strong motor synergies across limbs. In this work, we propose to learn a unified policy for whole-body control of a legged manipulator using reinforcement learning. We propose Regularized Online Adaptation to bridge the Sim2Real gap for high-DoF control, and Advantage Mixing, which exploits the causal dependency in the action space to overcome local minima when training the whole-body system. We also present a simple design for a low-cost legged manipulator, and find that our unified policy can demonstrate dynamic and agile behaviors across several task setups.

@article{chen2023progressive,

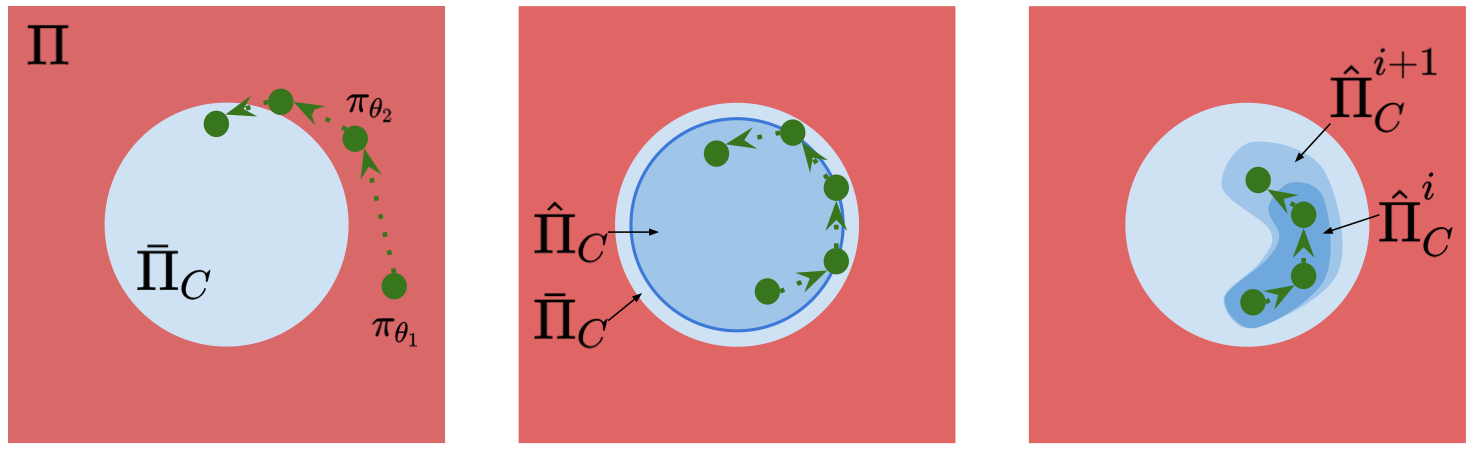

title={Progressive Adaptive Chance-Constrained Safeguards for Reinforcement Learning},

author={Chen, Zhaorun and Chen, Binhao and He, Tairan and Gong, Liang and Liu, Chengliang},

journal={arXiv preprint arXiv:2310.03379},

year={2023}

}

|

|

|

pdf |

abstract |

bibtex |

arXiv

Despite the tremendous success of Reinforcement Learning (RL) algorithms in simulation environments, applying RL to real-world applications still faces many challenges. A major concern is safety, in other words, constraint satisfaction. State-wise constraints are among the most common constraints in real-world applications and among the most challenging constraints in Safe RL. Enforcing state-wise constraints is essential to many challenging tasks such as autonomous driving and robot manipulation. This paper provides a comprehensive review of existing approaches that address state-wise constraints in RL. Under the framework of State-wise Constrained Markov Decision Process (SCMDP), we discuss the connections, differences, and trade-offs of existing approaches in terms of (i) safety guarantee and scalability, (ii) safety and reward performance, and (iii) safety after convergence and during training. We also summarize limitations of current methods and discuss potential future directions.

@inproceedings{ijcai2023p763,

title = {State-wise Safe Reinforcement Learning: A Survey},

author = {Zhao, Weiye and He, Tairan and Chen, Rui and Wei, Tianhao and Liu, Changliu},

booktitle = {Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence, {IJCAI-23}},

publisher = {International Joint Conferences on Artificial Intelligence Organization},

editor = {Edith Elkind},

pages = {6814--6822},

year = {2023},

month = {8},

note = {Survey Track},

doi = {10.24963/ijcai.2023/763},

url = {https://doi.org/10.24963/ijcai.2023/763},

}

|

|

webpage |

pdf |

abstract |

bibtex |

arXiv |

code

Visual imitation learning enables reinforcement learning agents to learn to behave from expert visual demonstrations such as videos or image sequences, without explicit, well-defined rewards. Previous research either adopted supervised learning techniques or induced simple and coarse scalar rewards from pixels, neglecting the dense information contained in the image demonstrations. In this work, we propose to measure the expertise of various local regions of image samples, called patches, and recover multi-dimensional patch rewards accordingly. Patch reward is a more precise rewarding characterization that serves as a fine-grained expertise measurement and visual explainability tool. Specifically, we present Adversarial Imitation Learning with Patch Rewards (PatchAIL), which employs a patch-based discriminator to measure the expertise of different local parts from given images and provide patch rewards. The patch-based knowledge is also used to regularize the aggregated reward and stabilize the training. We evaluate our method on DeepMind Control Suite and Atari tasks. The experiment results have demonstrated that PatchAIL outperforms baseline methods and provides valuable interpretations for visual demonstrations.

@article{liu2023visual,

title={Visual imitation learning with patch rewards},

author={Liu, Minghuan and He, Tairan and Zhang, Weinan and Yan, Shuicheng and Xu, Zhongwen},

journal={arXiv preprint arXiv:2302.00965},

year={2023}

}

|

|

|

pdf |

abstract |

bibtex |

arXiv

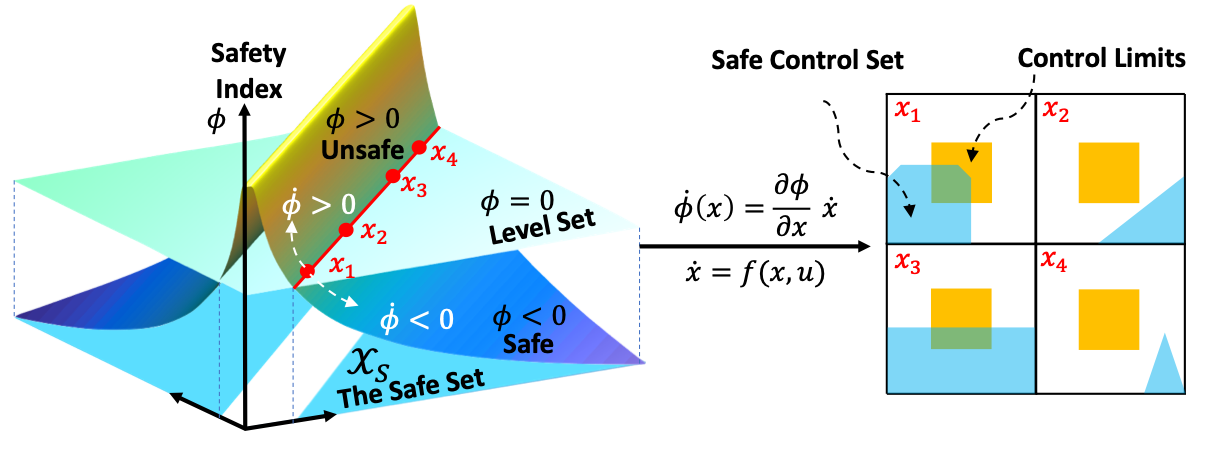

Control systems often need to satisfy strict safety requirements. Safety index provides a handy way to evaluate the safety level of the system and derive the resulting safe control policies. However, designing safety index functions under control limits is difficult and requires a great amount of expert knowledge. This paper proposes a framework for synthesizing the safety index for general control systems using sum-of-squares programming. Our approach is to show that ensuring the non-emptiness of safe control on the safe set boundary is equivalent to a local manifold positiveness problem. We then prove that this problem is equivalent to sum-of-squares programming via the Positivstellensatz of algebraic geometry. We validate the proposed method on robot arms with different degrees of freedom and ground vehicles. The results show that the synthesized safety index guarantees safety and our method is effective even in high-dimensional robot systems.

@inproceedings{zhao2023safety,

title={Safety index synthesis via sum-of-squares programming},

author={Zhao, Weiye and He, Tairan and Wei, Tianhao and Liu, Simin and Liu, Changliu},

booktitle={2023 American Control Conference (ACC)},

pages={732--737},

year={2023},

organization={IEEE}

}

|

|

pdf |

abstract |

bibtex |

arXiv

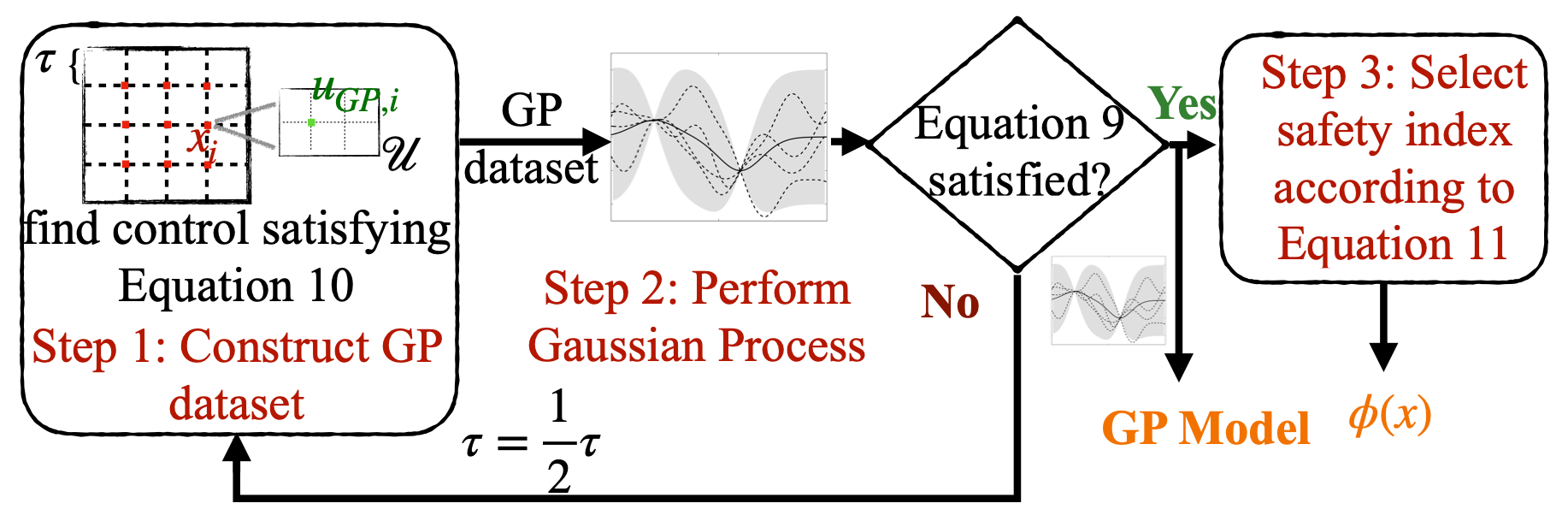

Safety is one of the biggest concerns in applying reinforcement learning (RL) to the physical world. At its core, it is challenging to ensure RL agents persistently satisfy a hard state constraint without white-box or black-box dynamics models. This paper presents an integrated model learning and safe control framework to safeguard any agent, where its dynamics are learned as Gaussian processes. The proposed theory provides (i) a novel method to construct an offline dataset for model learning that best achieves safety requirements; (ii) a parameterization rule for safety index to ensure the existence of safe control; (iii) a safety guarantee in terms of probabilistic forward invariance when the model is learned using the aforementioned dataset. Simulation results show that our framework guarantees almost zero safety violation on various continuous control tasks.

@inproceedings{zhao2023probabilistic,

title={Probabilistic safeguard for reinforcement learning using safety index guided gaussian process models},

author={Zhao, Weiye and He, Tairan and Liu, Changliu},

booktitle={Learning for Dynamics and Control Conference},

pages={783--796},

year={2023},

organization={PMLR}

}

|

|

pdf |

abstract |

bibtex |

arXiv

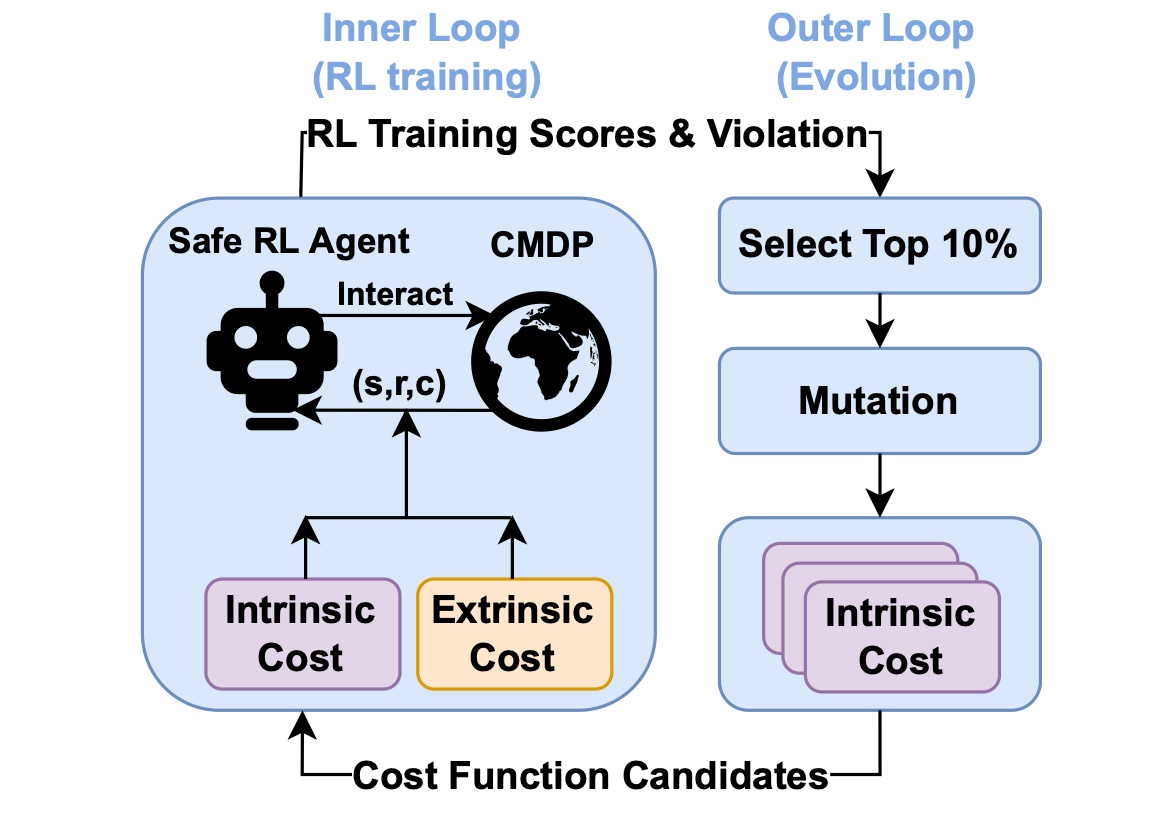

Safety is a critical hurdle that limits the application of deep reinforcement learning (RL) to real-world control tasks. To this end, constrained reinforcement learning leverages cost functions to improve safety in constrained Markov decision processes. However, such constrained RL methods fail to achieve zero violation even when the cost limit is zero. This paper analyzes the reason for such failure, which suggests that a proper cost function plays an important role in constrained RL. Inspired by the analysis, we propose AutoCost, a simple yet effective framework that automatically searches for cost functions that help constrained RL to achieve zero-violation performance. We validate the proposed method and the searched cost function on the safe RL benchmark Safety Gym. We compare the performance of augmented agents that use our cost function to provide additive intrinsic costs with baseline agents that use the same policy learners but with only extrinsic costs. Results show that the converged policies with intrinsic costs in all environments achieve zero constraint violation and comparable performance with baselines.

@article{he2023autocost,

title={Autocost: Evolving intrinsic cost for zero-violation reinforcement learning},

author={He, Tairan and Zhao, Weiye and Liu, Changliu},

journal={arXiv preprint arXiv:2301.10339},

year={2023}

}

|

|

webpage |

pdf |

abstract |

bibtex |

arXiv |

code

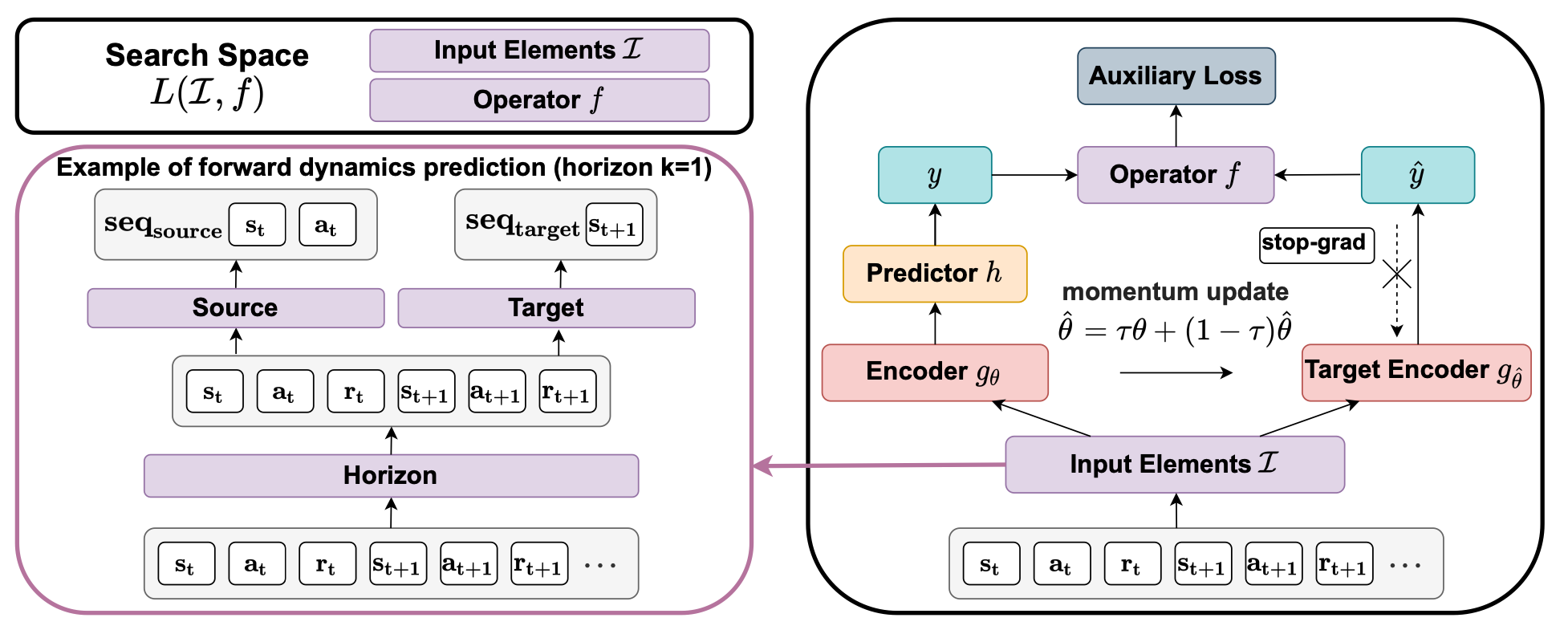

A good state representation is crucial to solving complicated reinforcement learning (RL) challenges. Many recent works focus on designing auxiliary losses for learning informative representations. Unfortunately, these handcrafted objectives rely heavily on expert knowledge and may be sub-optimal. In this paper, we propose a principled and universal method for learning better representations with auxiliary loss functions, named Automated Auxiliary Loss Search (A2LS), which automatically searches for top-performing auxiliary loss functions for RL. Specifically, based on the collected trajectory data, we define a general auxiliary loss space of size 7.5 × 10^20 and explore the space with an efficient evolutionary search strategy. Empirical results show that the discovered auxiliary loss (namely, A2-winner) significantly improves the performance on both high-dimensional (image) and low-dimensional (vector) unseen tasks with much higher efficiency, showing promising generalization ability to different settings and even different benchmark domains. We conduct a statistical analysis to reveal the relations between patterns of auxiliary losses and RL performance.

@inproceedings{zhao2021model,

title={Model-free safe control for zero-violation reinforcement learning},

author={Zhao, Weiye and He, Tairan and Liu, Changliu},

booktitle={5th Annual Conference on Robot Learning},

year={2021}

}

|

|

pdf |

abstract |

bibtex |

openreview |

code

While deep reinforcement learning (DRL) has impressive performance in a variety of continuous control tasks, one critical hurdle that limits the application of DRL to the physical world is the lack of safety guarantees. It is challenging for DRL agents to persistently satisfy a hard state constraint (known as the safety specification) during training. On the other hand, safe control methods with safety guarantees have been extensively studied. However, to synthesize safe control, these methods require explicit analytical models of the dynamic system, and such models are usually not available in DRL. This paper presents a model-free safe control strategy to synthesize safeguards for DRL agents, which will ensure zero safety violation during training. In particular, we present an implicit safe set algorithm, which synthesizes the safety index (also called the barrier certificate) and the subsequent safe control law only by querying a black-box dynamic function (e.g., a digital twin simulator). The theoretical results indicate the implicit safe set algorithm guarantees forward invariance and finite-time convergence to the safe set. We validate the proposed method on the state-of-the-art safety benchmark Safety Gym. Results show that the proposed method achieves zero safety violation and gains 95 cumulative reward compared to state-of-the-art safe DRL methods. Moreover, it can easily scale to high-dimensional systems.

@inproceedings{zhao2021model,

title={Model-free safe control for zero-violation reinforcement learning},

author={Zhao, Weiye and He, Tairan and Liu, Changliu},

booktitle={5th Annual Conference on Robot Learning},

year={2021}

}

|

|

|

pdf |

abstract |

bibtex |

arXiv |

code

A good state representation is crucial to solving complicated reinforcement learning (RL) challenges. Many recent works focus on designing auxiliary losses for learning informative representations. Unfortunately, these handcrafted objectives rely heavily on expert knowledge and may be sub-optimal. In this paper, we propose a principled and universal method for learning better representations with auxiliary loss functions, named Automated Auxiliary Loss Search (A2LS), which automatically searches for top-performing auxiliary loss functions for RL. Specifically, based on the collected trajectory data, we define a general auxiliary loss space of size 7.5 × 10^20 and explore the space with an efficient evolutionary search strategy. Empirical results show that the discovered auxiliary loss (namely, A2-winner) significantly improves the performance on both high-dimensional (image) and low-dimensional (vector) unseen tasks with much higher efficiency, showing promising generalization ability to different settings and even different benchmark domains. We conduct a statistical analysis to reveal the relations between patterns of auxiliary losses and RL performance.

@inproceedings{zhao2021model,

title={Model-free safe control for zero-violation reinforcement learning},

author={Zhao, Weiye and He, Tairan and Liu, Changliu},

booktitle={5th Annual Conference on Robot Learning},

year={2021}

}

|

|

|

Android Code |

iOS Code |

Project Page |

Farewell Video

A carefree forum platform where SJTU students share and talk anonymously. More than 10,000 users used「无可奉告」on the SJTU campus. |

|

International Joint Conference on Artificial Intelligence (IJCAI), 2024

International Conference on Machine Learning (ICML), 2024

International Conference on Learning Representations (ICLR), 2024

IEEE Conference on Decision and Control (CDC), 2023

Conference on Neural Information Processing Systems (NeurIPS), 2023

Learning for Dynamics & Control Conference (L4DC), 2023

AAAI Conference on Artificial Intelligence (AAAI), 2023, 2024

Conference on Robot Learning (CoRL), 2022, 2023 |

|

|